Vomo vs Otter: Why Whisper Models Win for AI Meeting Transcription in 2026

If you’re spending sixty minutes cleaning up a messy transcript after a sixty-minute meeting, your productivity tool is actually a bottleneck. Finding a reliable AI meeting note taker is the first real step toward winning back your schedule. Most professionals are tired of fixing broken transcripts, correcting speaker labels, and rewriting summaries after every call. What teams need today is not just recording software, but an intelligent system that captures conversations accurately, organizes decisions, and turns discussions into usable information automatically.

In 2026, the conversation around AI transcription has changed completely. Teams no longer ask whether transcription works. They ask whether it works accurately enough to remove editing entirely. The modern standard is no longer basic speech recognition. The modern standard is reliable, searchable, decision-ready meeting intelligence.

That shift explains why the comparison between Vomo.ai and Otter.ai matters more than ever. Both tools promise automated meeting notes, but they approach transcription from very different technological foundations.

Today, high-fidelity speech models such as OpenAI Whisper and Deepgram Nova-2 have reset expectations for accuracy, multilingual understanding, and contextual processing. Instead of treating transcription as a convenience feature, modern platforms treat it as the foundation of knowledge management.

This article explains in depth how these systems differ, what actually affects transcript accuracy, and how to choose the right tool for your workflow in 2026.

Why Meeting Transcription Became a Core Productivity System

Meetings generate decisions, commitments, timelines, and strategy discussions. Historically, most of this information disappeared into memory or scattered handwritten notes. Digital recording solved the storage problem, but not the usability problem. Few professionals replay hour-long recordings to extract one decision.

Early transcription tools attempted to solve this by converting audio into text. However, early-generation models struggled with overlapping speakers, accents, and domain-specific terminology. Many users still spend substantial time manually editing transcripts.

The new generation of AI transcription systems works differently. Instead of simply converting speech into words, modern models attempt to understand conversational structure. They recognize who spoke, detect contextual intent, identify action items, and separate casual discussion from decision-making moments.

This shift transforms meeting transcription from a passive archive into an active operational tool.

The Rise of High-Fidelity Speech Models

The real revolution in transcription accuracy did not come from user interface improvements or calendar integrations. It came from improvements in neural architectures for speech recognition.

Modern models such as Whisper and Nova-2 were trained on massive multilingual datasets that include:

- Real-world meeting conversations

- Multiple accent variations

- Industry-specific terminology

- Background noise scenarios

- Rapid conversational speech

As a result of this training, these systems no longer rely solely on phonetic matching. They apply contextual language prediction, using the surrounding words to choose between similar-sounding candidates. If a finance team discusses EBITDA projections, the model recognizes the domain vocabulary. When engineers discuss API latency, the model is more likely to produce the correct technical terms than a phonetically similar everyday phrase.

This contextual reasoning is the key difference between modern transcription engines and older-generation captioning tools.

Overview of Vomo.ai: Precision Meets Intelligence

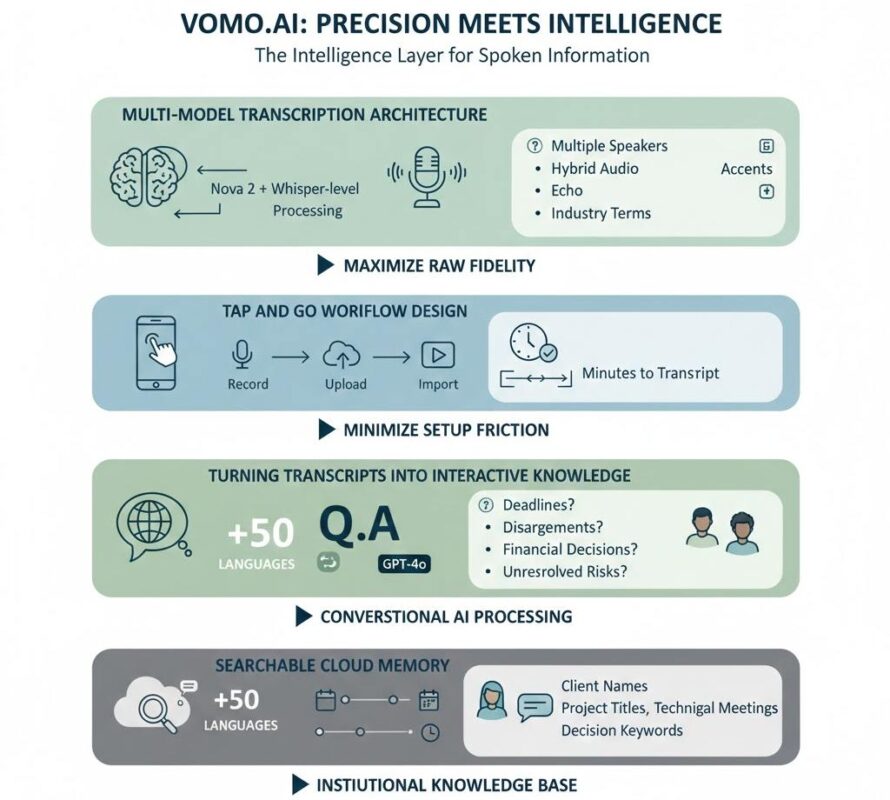

Vomo.ai positions itself not simply as a meeting recorder but as an intelligence layer for spoken information. Its architecture uses multiple recognition engines and advanced language models to maximize transcript reliability before any summarization begins.

The platform’s philosophy is straightforward. If the transcript itself is flawed, every summary, action list, or insight generated later will be flawed as well. Therefore, the system prioritizes raw transcription fidelity first.

Multi-model transcription architecture

Instead of depending on a single recognition engine, Vomo combines multiple speech recognition systems, including Nova-2 and Whisper-level processing. This layered approach increases accuracy in situations where traditional systems fail, including:

- Multiple people speaking simultaneously

- Hybrid meetings with remote audio distortion

- Conference rooms with echo

- Strong regional accents

- Industry-specific terminology

By resolving these issues at the transcription stage, the platform reduces the need for manual correction later.
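The article does not describe how the engines are actually combined, so the following is only a minimal sketch of one possible approach: run two engines on the same audio and keep, for each segment, the hypothesis with the higher reported confidence. The `Segment` type, the `merge_by_confidence` function, and the assumption that both engines return identically aligned segments are all hypothetical simplifications, not Vomo's implementation.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float       # segment start time in seconds
    text: str          # recognized text for this segment
    confidence: float  # engine-reported confidence, assumed to lie in [0, 1]


def merge_by_confidence(engine_a: list[Segment], engine_b: list[Segment]) -> list[Segment]:
    """Keep the higher-confidence hypothesis for each aligned segment pair.

    Assumes both engines produced the same number of segments with the
    same boundaries, which real systems would have to enforce separately.
    """
    if len(engine_a) != len(engine_b):
        raise ValueError("segment lists must be aligned one-to-one")
    return [a if a.confidence >= b.confidence else b
            for a, b in zip(engine_a, engine_b)]


# Hypothetical outputs from two engines for the same two segments.
engine_a = [Segment(0.0, "the ebitda projection looks strong", 0.94),
            Segment(4.2, "a p i late and see is rising", 0.41)]
engine_b = [Segment(0.0, "the e bit da projection looks strong", 0.58),
            Segment(4.2, "api latency is rising", 0.91)]

merged = merge_by_confidence(engine_a, engine_b)
merged_text = " ".join(segment.text for segment in merged)
```

Production systems typically align hypotheses at the word level and weigh more than a single confidence score, but the sketch shows why a second engine can rescue segments where the first one fails.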

Tap-and-go workflow design

One of the major productivity advantages of modern transcription platforms is the removal of setup friction. Vomo focuses heavily on minimizing the number of user steps.

A typical workflow starts with one of three inputs and ends with a finished transcript:

- Recording a meeting directly inside the application

- Uploading an existing MP3 or WAV file

- Importing an online video source

- Receiving a full transcript within minutes

The goal is to reduce cognitive overhead. Users should not need to configure integrations or deploy calendar bots before capturing information.

Turning transcripts into interactive knowledge

After transcription, Vomo applies conversational AI processing powered by OpenAI models such as GPT-4o. Instead of providing only static text output, the system allows users to interact with the transcript conversationally.

This means you can ask questions such as:

- What deadlines were assigned?

- Which participants disagreed with the proposal?

- Summarize only financial decisions

- List unresolved risks

The AI scans the full transcript and returns targeted answers. This dramatically reduces the time required to review long discussions.
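As a rough illustration of how transcript querying can work in general, the sketch below assembles a speaker-labeled transcript and a user question into a single prompt that could then be sent to a language model. The function name, the prompt wording, and the sample transcript are assumptions made for illustration; the article does not specify how Vomo builds its requests, and no model call is made here.

```python
def build_transcript_prompt(transcript: list[tuple[str, str]], question: str) -> str:
    """Format (speaker, utterance) pairs and a question into one prompt string."""
    lines = [f"{speaker}: {utterance}" for speaker, utterance in transcript]
    return (
        "Answer the question using only the meeting transcript below.\n\n"
        "Transcript:\n" + "\n".join(lines) + "\n\n"
        f"Question: {question}"
    )


# Hypothetical two-line transcript with speaker labels.
transcript = [
    ("Dana", "Legal review has to be finished by March 14."),
    ("Lee", "I disagree with launching before the audit is done."),
]

prompt = build_transcript_prompt(transcript, "What deadlines were assigned?")
```

Restricting the model to the transcript text is a common design choice because it keeps answers traceable to what was actually said in the meeting.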

Multilingual accessibility

Global teams often struggle with the reliability of transcription across languages. Many systems perform well in English but degrade significantly with mixed-language discussions.

Vomo supports more than 50 languages, enabling teams to transcribe multilingual meetings consistently. This capability is especially important for international organizations managing distributed workforces.

Searchable cloud memory

Another major productivity improvement comes from persistent searchable storage. Instead of storing isolated transcripts, Vomo builds a long-term searchable knowledge base.

Users can retrieve past discussions months later by searching for:

- Client names

- Project titles

- Technical topics

- Decision keywords

This transforms meeting recordings into institutional memory rather than temporary documentation.
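A searchable archive of this kind is commonly built on an inverted index, which maps each word to the meetings that contain it. The sketch below is a minimal, assumed illustration of that idea; the meeting IDs, sample text, and function names are hypothetical, and a production system would add ranking, stemming, and phrase search.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(transcripts: dict[str, str]) -> dict[str, set[str]]:
    """Map every token to the set of meeting IDs whose transcript contains it."""
    index: dict[str, set[str]] = defaultdict(set)
    for meeting_id, text in transcripts.items():
        for token in tokenize(text):
            index[token].add(meeting_id)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the meetings that contain every token in the query."""
    tokens = tokenize(query)
    if not tokens:
        return set()
    results = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        results &= index.get(token, set())
    return results


# Hypothetical archive of two past meetings.
transcripts = {
    "2026-01-12-kickoff": "Acme asked about API latency targets.",
    "2026-02-03-review": "Budget approved for the Acme renewal.",
}
index = build_index(transcripts)
latency_hits = search(index, "Acme latency")
client_hits = search(index, "acme")
```

Here a search for a client name returns both meetings, while adding a technical topic narrows the result to the one meeting where both were discussed.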

Overview of Otter.ai: The Legacy Market Leader

Otter.ai played a major role in introducing automated transcription to mainstream business users. It gained popularity through early integration with remote meeting platforms such as Zoom and Microsoft Teams.

For many organizations, Otter was their first experience with AI-generated meeting captions and automatic note capture.

Strengths of the Otter platform

Otter still offers several useful capabilities, including:

- Live real-time captioning during meetings

- Automatic joining of scheduled calls through calendar syncing

- Basic meeting summaries and keyword highlights

- A familiar interface used by many long-term customers

For organizations prioritizing real-time captions during live calls, these features remain useful.

Where legacy systems encounter friction

However, the expectations for transcription accuracy in 2026 are far higher than when Otter first launched.

Common user challenges reported across legacy transcription systems include:

- Speaker confusion in rapid discussion

- Incorrect technical terminology

- Reduced accuracy with international accents

- Errors in overlapping speech

- Frequent manual transcript editing

When users must manually correct transcripts, the time savings promised by automation disappear.

Pricing and usage complexity

Another area where legacy systems sometimes struggle is pricing clarity. As platforms matured, feature tiers became more segmented. Some users encounter limits such as:

- Monthly transcription minute caps

- Restrictions on file uploads

- Advanced feature paywalls

While these models may suit occasional users, high-volume teams often prefer unlimited processing workflows.

Head-to-Head Comparison: Vomo vs Otter

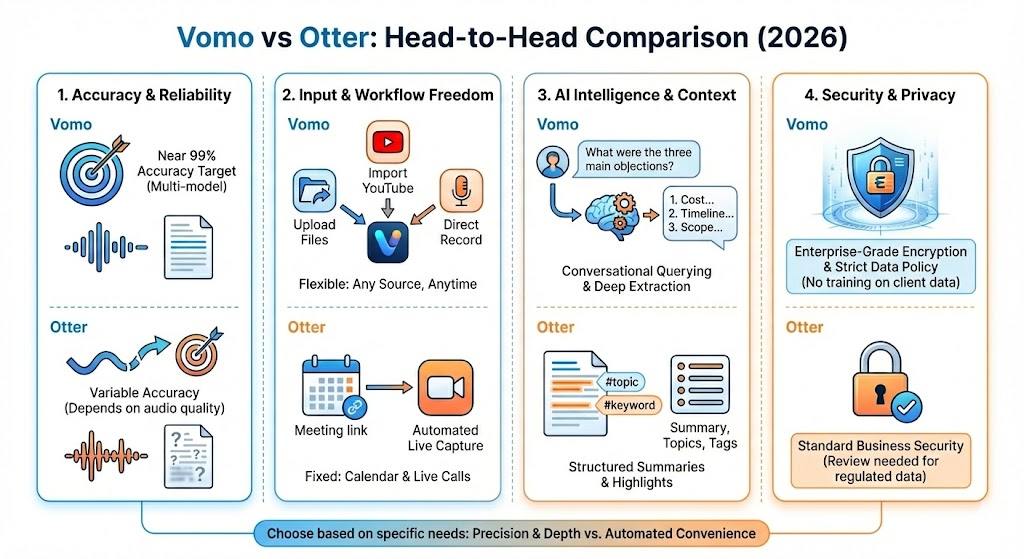

To make a realistic decision, professionals should evaluate four primary factors.

1. Accuracy and transcription reliability

Accuracy remains the single most important metric.

Vomo targets near ninety-nine percent accuracy through its multi-model recognition architecture.

Otter accuracy varies more significantly depending on audio quality, speaker clarity, and meeting complexity.

For environments such as legal documentation, research interviews, or technical planning sessions, higher baseline accuracy directly reduces labor costs.
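Accuracy claims are easier to compare when they are measured the same way. A common metric is word error rate (WER): the number of word substitutions, insertions, and deletions needed to turn the machine transcript into a human reference, divided by the number of reference words. Accuracy is then roughly 1 minus WER. The sketch below is one way to check a tool on your own recordings; the sample sentences are invented, and real evaluations usually normalize punctuation and numbers first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# Invented example: the engine splits "api" into letters and drops "by".
reference = "we agreed to ship the api latency fix by friday"
hypothesis = "we agreed to ship the a p i latency fix friday"
wer = word_error_rate(reference, hypothesis)  # 4 errors over 10 reference words
```

A tool that truly reaches near ninety-nine percent accuracy would make about one such word error per hundred reference words, which is a useful benchmark when testing candidates on the same audio.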

2. Input flexibility and workflow freedom

Vomo follows a flexible input philosophy. Users can upload audio files, import YouTube videos, or record directly in the application.

Otter focuses more heavily on scheduled meeting integrations and automated live capture.

For professionals handling interviews, recorded podcasts, training sessions, or field recordings, flexible file handling often becomes more important than calendar automation.

3. AI intelligence and contextual understanding

Modern transcription tools are increasingly judged not only by speech recognition but by post-processing intelligence.

Vomo enables conversational querying of transcripts using GPT-powered processing. This allows deep contextual extraction of information.

Otter typically provides structured summaries, topic highlights, and keyword tagging rather than fully conversational analysis.

The difference becomes clear when reviewing complex strategy meetings. Conversational AI querying enables targeted insight retrieval rather than manual scanning.

4. Security and privacy protection

Sensitive meetings often involve confidential information such as financial forecasts, product designs, or legal discussions.

Vomo emphasizes enterprise-grade encryption and strict privacy policies governing whether customer data is used for model training.

Organizations handling regulated information should always review security architecture before adopting any transcription platform.

Which Tool Fits Different Professional Use Cases

Choosing the right transcription tool depends heavily on how meetings function inside your workflow.

When Vomo is typically the stronger choice

- Researchers conducting long, recorded interviews

- Legal professionals requiring verbatim documentation

- Medical consultants documenting patient discussions

- Content creators repurposing podcast recordings

- International companies managing multilingual teams

- Professionals who need to transcribe voice memo recordings from mobile devices into accurate, searchable text

In these scenarios, transcription accuracy directly impacts operational quality.

When Otter may still be sufficient

- Daily internal team standups

- Low-risk recurring meetings

- Organizations prioritizing live captions over post-meeting intelligence

- Users already deeply integrated into automated calendar workflows

Basic transcription may still be acceptable for simple documentation needs.

Mobile productivity and voice capture

Modern professionals rarely operate exclusively from desktop environments. Meeting insights often emerge outside formal conference calls.

Both major transcription ecosystems provide mobile support across iOS and Android platforms, enabling quick voice memo capture.

This capability is especially valuable for:

- Journalists recording field interviews

- Consultants capturing client notes immediately after meetings

- Executives recording strategic reflections

- Product managers documenting brainstorming sessions

The ability to instantly convert spoken thoughts into structured, searchable text ensures that valuable insights never disappear.

Real Cost of Inaccurate Transcription

Many organizations underestimate how expensive inaccurate transcription actually is.

Consider the hidden time losses:

- Ten minutes correcting speaker names

- Fifteen minutes fixing technical terms

- Twenty minutes rewriting summaries

- Ten minutes verifying decisions

Together, these tasks add up to 55 minutes, which can easily match or exceed the meeting’s duration.

When multiplied across dozens of weekly meetings, inaccurate transcription becomes a serious productivity drain.

Therefore, investing in higher-accuracy systems often yields measurable operational savings.
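The cleanup estimates listed above can be turned into a quick weekly calculation. The per-task minutes come from the list; the figure of 24 meetings per week is an assumption chosen to stand in for "dozens" and should be replaced with your team's own number.

```python
# Per-meeting cleanup estimates, in minutes, taken from the list above.
cleanup_minutes = {
    "correcting speaker names": 10,
    "fixing technical terms": 15,
    "rewriting summaries": 20,
    "verifying decisions": 10,
}

# Assumption: two dozen meetings per week across the team.
meetings_per_week = 24

per_meeting_minutes = sum(cleanup_minutes.values())              # 55 minutes
weekly_cleanup_hours = per_meeting_minutes * meetings_per_week / 60

print(f"Cleanup per meeting: {per_meeting_minutes} minutes")
print(f"Cleanup per week: {weekly_cleanup_hours:.1f} hours")
```

Under these assumptions the team spends 22 hours a week on transcript cleanup, which is more than half of one full-time role.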

Conclusion

In 2026, the conversation about AI meeting tools is no longer about whether transcription works. It is about whether transcription completely removes work.

Vomo.ai leads the modern productivity category by treating transcription as the foundation of organizational intelligence rather than a final deliverable. By combining high-accuracy recognition engines with conversational AI analysis, it transforms raw meeting audio into structured, searchable knowledge.

Otter.ai remains a familiar and capable legacy option for real-time captions and simple recurring meetings. However, as professional expectations shift toward zero-editing workflows and deep, searchable insight extraction, systems built on newer speech recognition architectures increasingly define the future of meeting productivity.

Ultimately, the best transcription system is the one that lets you finish the meeting and immediately move forward with decisions, actions, and clarity without reopening the transcript for cleanup.

When that happens, transcription stops being documentation and becomes operational acceleration.